Here is the routine I use to set logging levels. I have a global `Dbug` value that I set depending on how much debug output I want to see. I know the code isn’t efficient, but that hasn’t been a problem yet.

    void sync_logging_level(void)
    {
        // configure default log levels to turn off the chatty logs
        log_filter_set(NULL, 0, log_source_id_get("mcuboot"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("mcuboot_util"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("sm_iot"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("battery"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("golioth"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("golioth_system"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("sensor_if"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("app_state"), LOG_LEVEL_DBG);
        log_filter_set(NULL, 0, log_source_id_get("app_settings"), LOG_LEVEL_INF);
        log_filter_set(NULL, 0, log_source_id_get("app_shell"), LOG_LEVEL_DBG);

        if (Dbug > 110) {
            LOG_PANIC();
        }

        if (Dbug < 50) {
            log_filter_set(NULL, 0, log_source_id_get("app_state"), LOG_LEVEL_INF);
            log_filter_set(NULL, 0, log_source_id_get("battery"), LOG_LEVEL_INF);
            log_filter_set(NULL, 0, log_source_id_get("golioth_system"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("golioth_mbox"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("golioth_fw_update"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("golioth_coap_client"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("fw_block_processor"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("golioth_coap_client_zephyr"), LOG_LEVEL_WRN);
            //xx log_filter_set(NULL, 0, log_source_id_get("i2c_nrfx_twim"), LOG_LEVEL_NONE);
        }

        if (Dbug < 25) {
            log_filter_set(NULL, 0, log_source_id_get("golioth"), LOG_LEVEL_INF);
            if (loopcount > 3) {
                log_filter_set(NULL, 0, log_source_id_get("golioth_fw_update"), LOG_LEVEL_WRN);
            }
            log_filter_set(NULL, 0, log_source_id_get("app_state"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("ds28e18"), LOG_LEVEL_NONE);
            log_filter_set(NULL, 0, log_source_id_get("BMP5xx"), LOG_LEVEL_NONE);
            log_filter_set(NULL, 0, log_source_id_get("mcuboot_util"), LOG_LEVEL_ERR);
            log_filter_set(NULL, 0, log_source_id_get("golioth_coap_client"), LOG_LEVEL_ERR);
            log_filter_set(NULL, 0, log_source_id_get("golioth_coap_client_zephyr"), LOG_LEVEL_NONE);
            log_filter_set(NULL, 0, log_source_id_get("golioth_sys_zephyr"), LOG_LEVEL_NONE);
        }

        if (Dbug < 10) {
            log_filter_set(NULL, 0, log_source_id_get("golioth"), LOG_LEVEL_ERR);
            log_filter_set(NULL, 0, log_source_id_get("golioth_fw_update"), LOG_LEVEL_WRN);
            log_filter_set(NULL, 0, log_source_id_get("net_sock"), LOG_LEVEL_NONE);
        }

        if (Dbug > 40) {
            log_printk_enable(false); // do not send logs to Golioth when debug level is too high.
        } else {
            log_printk_enable(true);
        }

        if (Dbug > 60) LOG_INF("log levels synched to debug level.");

        return;
    }
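For anyone who wants to adapt this, the threshold logic could also be written as a table, which may be easier to extend than a long run of `if` blocks. This is only a rough sketch, not the routine above: `struct log_rule`, `level_for`, and the `LVL_*` names are made up for illustration, the table copies just a few of the thresholds, and the enum simply mirrors the usual ordering of Zephyr's `LOG_LEVEL_*` values. On a device, the returned level would presumably be passed to `log_filter_set()`. As far as I understand the Zephyr API, `log_source_id_get()` returns a negative number for an unknown source name, so checking that before calling `log_filter_set()` seems worthwhile.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Stand-ins that mirror the ordering of Zephyr's LOG_LEVEL_* values. */
enum { LVL_NONE, LVL_ERR, LVL_WRN, LVL_INF, LVL_DBG };

/* Hypothetical rule: when the debug value is below `below`,
 * the named source is set to `level`. */
struct log_rule {
    int below;
    const char *source;
    int level;
};

/* A small subset of the thresholds from the routine above.
 * Rules are listed from loosest to strictest, so later matches win. */
static const struct log_rule rules[] = {
    {50, "app_state",      LVL_INF},
    {50, "battery",        LVL_INF},
    {50, "golioth_system", LVL_WRN},
    {25, "golioth",        LVL_INF},
    {25, "app_state",      LVL_WRN},
    {10, "golioth",        LVL_ERR},
};

/* Return the level a source ends up with for a given debug value.
 * Sources with no matching rule keep `default_level`. */
int level_for(int dbug, const char *source, int default_level)
{
    int level = default_level;

    assert(source != NULL);
    for (size_t i = 0; i < sizeof(rules) / sizeof(rules[0]); i++) {
        if (dbug < rules[i].below && strcmp(rules[i].source, source) == 0) {
            level = rules[i].level; /* the last matching rule overrides */
        }
    }
    return level;
}
```

With this table, `level_for(40, "app_state", LVL_DBG)` gives `LVL_INF` and `level_for(20, "app_state", LVL_DBG)` gives `LVL_WRN`, which matches how the later `if` blocks in the routine override the earlier ones.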

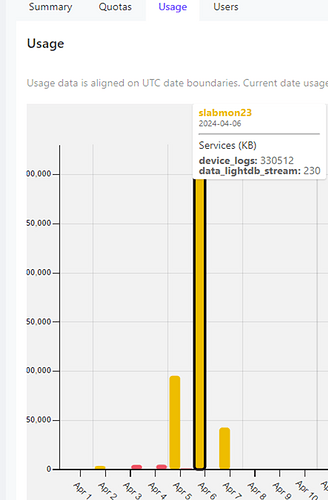

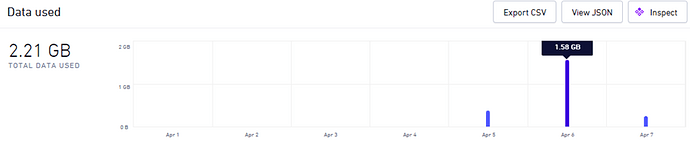

So even with rate limiting, this system would generate 0.33 GB/day * 30 days * $0.20/MB ($200/GB) = $1980 in data charges to Golioth PER DEVICE (plus cellular charges).
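To make the arithmetic easy to re-run with other numbers, here is a small sketch of the same estimate. The 0.33 GB/day and $0.20/MB figures are the ones quoted above; the function name `monthly_cost_usd` is made up, and the conversion assumes decimal units (1 GB = 1000 MB), which is what the $200/GB figure implies.

```c
#include <assert.h>

/* Estimated data charge in dollars for one device over `days` days.
 * Assumes 1 GB = 1000 MB, matching $0.20/MB == $200/GB. */
double monthly_cost_usd(double gb_per_day, int days, double usd_per_mb)
{
    assert(gb_per_day >= 0.0 && days >= 0 && usd_per_mb >= 0.0);
    return gb_per_day * days * 1000.0 * usd_per_mb;
}
```

Calling `monthly_cost_usd(0.33, 30, 0.20)` gives roughly 1980, and multiplying that by the number of devices gives the exposure for a fleet.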

This is a HUGE exposure for anyone with a fleet of devices that could start accruing this kind of expense without notice. If there is no way to prevent this in the API, then Golioth needs to have all kinds of warnings and blog entries on how to mitigate it.